OS Login is a GCE service that keeps your public keys with the GCE project, together with your Unix account information, including login name and UID/GID.

#GCLOUD SSH TUNNEL TO INSTANCE INSTALL#

To be able to establish the IAP tunnel, you should install the Cloud SDK, so that the gcloud command is available and authenticated.

Sometimes people copy a private key (even a highly sensitive one, for example a GitHub key) across machines out of convenience. But if one of these machines is lost, you’ll have to revoke that single key, which pays you back with the massive inconvenience of creating a new one for every computer you work on. And if none of the machines has physically disappeared, but you see signs that someone is using the key, you had better know which machine the key leaked from. Always maintain one key per machine, be it for GitHub, for BurrMill or anything else.

The golden rule of SSH key management is that the private key never leaves the directory it was created in. OS Login SSH keys, however, do not offer any additional security over IAP: anyone in possession of your Cloud SDK credentials can add their own key and connect to your instances. It therefore does not make sense to password-protect them, even if you normally encrypt your SSH keys.
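As a minimal sketch of the one-key-per-machine habit, the helper below only builds an `ssh-keygen` command line for a dedicated, passphrase-less key that is created in the directory where it will stay. The file naming scheme (`id_ed25519_<purpose>`) and the `purpose@host` comment are illustrative assumptions, not a BurrMill convention.

```python
import socket
from pathlib import Path


def keygen_command(key_dir, purpose, host=None):
    """Build an ssh-keygen argv for a per-machine, per-purpose key.

    The key file name and comment format are hypothetical conventions
    chosen for this example only.
    """
    host = host or socket.gethostname()
    key_path = Path(key_dir) / f"id_ed25519_{purpose}"
    return [
        "ssh-keygen",
        "-t", "ed25519",             # key type
        "-f", str(key_path),         # create the key where it will live
        "-N", "",                    # empty passphrase
        "-C", f"{purpose}@{host}",   # comment records the owning machine
    ]


if __name__ == "__main__":
    # Print the command instead of running it, so nothing is created by accident.
    print(keygen_command(Path.home() / ".ssh", "burrmill"))
```

Recording the machine name in the key comment makes it easier to tell later which registered key belongs to which computer.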

#GCLOUD SSH TUNNEL TO INSTANCE MANUAL#

At this point, if you’ve used SSH for a while, you should have noticed that one critical component is missing from the flow. The tunnel allows your SSH client to connect to the server, but you need a way to authenticate to it, and the most common way to authenticate to an SSH server is to register the public half of a key pair with the server. But in a dynamic environment, where machines may temporarily pop up out of nothing or be rebuilt on demand, this is not as easy as adding your key to the ~/.ssh/authorized_keys file. Sort of a catch 22 (referring to the SSH port number, of course): to add the key you need to access the machine first, but…

Be in control of your OS Login keys. If you were to use gcloud compute ssh, it would have created the key and registered it. But as long as you want to keep your keys in neat order, and know which to remove and which to keep, manual key management is the best option.
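Manual OS Login key management comes down to three operations: add, list and remove. The sketch below only assembles the corresponding `gcloud compute os-login ssh-keys` command lines; the helper names and the example key path are made up for illustration, and running the commands assumes an authenticated Cloud SDK.

```python
OSLOGIN = ["gcloud", "compute", "os-login", "ssh-keys"]


def oslogin_add_key(pubkey_path, ttl=None):
    """argv that registers a public key file with OS Login.

    ttl is optional, e.g. "30d"; without it the key does not expire.
    """
    cmd = OSLOGIN + ["add", f"--key-file={pubkey_path}"]
    if ttl:
        cmd.append(f"--ttl={ttl}")
    return cmd


def oslogin_list_keys():
    """argv that lists the keys currently registered for your account."""
    return OSLOGIN + ["list"]


def oslogin_remove_key(pubkey_path):
    """argv that unregisters the key stored in the given public key file."""
    return OSLOGIN + ["remove", f"--key-file={pubkey_path}"]


if __name__ == "__main__":
    # Hypothetical key path; print the commands rather than executing them.
    pub = "~/.ssh/id_ed25519_burrmill.pub"
    for argv in (oslogin_add_key(pub), oslogin_list_keys(), oslogin_remove_key(pub)):
        print(" ".join(argv))
```

Each list can be passed to `subprocess.run` once you are satisfied with what it would do.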

#GCLOUD SSH TUNNEL TO INSTANCE FULL#

This command is not documented, but it is not likely to go away: gcloud compute ssh uses it internally, so it has to persist in one form or another.

gcloud compute ssh is convenient but has its own drawbacks. For one, it may create an SSH key for you. I do not know about you, but I am very meticulous when it comes to managing the SSH keys kept on all machines I use, and for this task I group the keys and other files together. Another is that you need to pass the command the zone name (you can have a default zone set in the local configuration on any given machine), but I wanted something more flexible.

We have a supplementary script, burrmill-ssh-ProxyCommand, which attempts to locate the host name you pass on the ssh command line from multiple sources. Without going into details (you are welcome to read its source), this utility allows you to connect to any uniquely named VM ✼ in the project by simply giving its name.

✼ The full unique name of an instance also includes the zone, so you can have two instances with the same name in two different zones.
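To illustrate the idea of connecting by name alone, here is a rough sketch of resolving a bare instance name to its zone and building a tunnel command from it. It is not how burrmill-ssh-ProxyCommand is implemented (read its source for that). The function names are hypothetical, and `--listen-on-stdin` is assumed to be the hidden `gcloud compute start-iap-tunnel` flag that gcloud compute ssh relies on; verify it against your SDK version.

```python
def list_instances_command():
    """argv that prints one 'name<TAB>zone' line per instance in the project."""
    return ["gcloud", "compute", "instances", "list",
            "--format=value(name,zone.basename())"]


def parse_instances(output):
    """Turn the listing output into a list of (name, zone) pairs."""
    pairs = []
    for line in output.splitlines():
        fields = line.split()
        if len(fields) == 2:
            pairs.append((fields[0], fields[1]))
    return pairs


def resolve_zone(name, instances):
    """Return the zone of the instance if its name is unique in the project."""
    zones = [zone for inst, zone in instances if inst == name]
    if len(zones) != 1:
        raise LookupError(f"{name!r} matches {len(zones)} instances, expected 1")
    return zones[0]


def tunnel_proxy_command(name, zone, port=22):
    """argv usable as an SSH ProxyCommand (flag assumed, see lead-in)."""
    return ["gcloud", "compute", "start-iap-tunnel", name, str(port),
            "--listen-on-stdin", f"--zone={zone}"]


if __name__ == "__main__":
    # Made-up listing: 'node' exists in two zones, 'login' is unique.
    listing = "login\tus-west1-b\nnode\tus-west1-b\nnode\tus-east1-c\n"
    instances = parse_instances(listing)
    print(tunnel_proxy_command("login", resolve_zone("login", instances)))
```

An ambiguous name raises `LookupError` rather than guessing, which matches the footnote: a name alone identifies an instance only when it is unique across zones.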

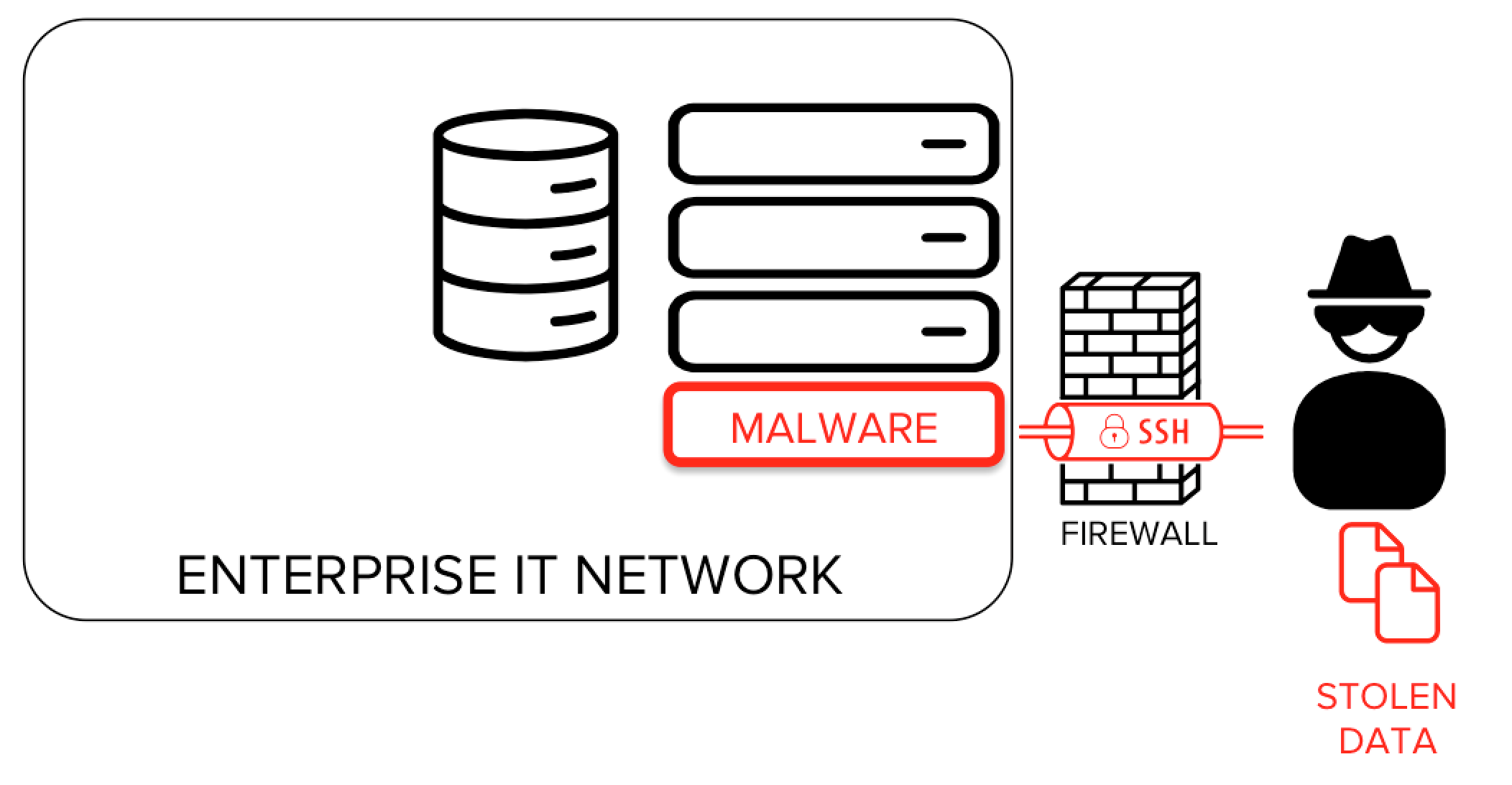

In this part we’ll go through the IAP tunneling feature of GCP, OS Login and its key management, and some ideas for an SSH setup that will make connecting to the cluster easier.

None of the VMs on your network are accessible from the Internet: this is the default firewall setting, and we did not open any ports to the whole world. To access machines inside, GCP offers the option of Identity-Aware Proxy (IAP) Tunneling. Connections are established from a narrow range of IP addresses, guarded by Google, so that you do not need the commonly used “bastion hosts” to protect your network. We created a firewall rule to allow this IP range to connect to the SSH port 22 of any instance on the network.

As long as you are authenticated in the Cloud SDK, you, as the owner of your project, are automatically granted access to the tunneling feature. If you add other accounts but do not grant them the Project Owner role, you’ll need to grant them access to tunneling, but this goes beyond our current walkthrough. If you peeked at the documentation page linked to above and wonder which of the two options we use: the third.
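For reference, a firewall rule like the one mentioned above could be expressed roughly as follows. The range 35.235.240.0/20 is the source range Google documents for IAP TCP forwarding; the rule and network names are placeholders, and the helper only builds the command line without running it.

```python
import ipaddress

# Source range Google documents for IAP TCP forwarding.
IAP_TCP_RANGE = ipaddress.ip_network("35.235.240.0/20")


def is_iap_source(addr):
    """True if the address falls inside the IAP TCP forwarding range."""
    return ipaddress.ip_address(addr) in IAP_TCP_RANGE


def firewall_rule_command(rule_name, network):
    """argv that creates an ingress rule allowing IAP to reach SSH port 22."""
    return [
        "gcloud", "compute", "firewall-rules", "create", rule_name,
        f"--network={network}",
        "--direction=INGRESS",
        "--allow=tcp:22",
        f"--source-ranges={IAP_TCP_RANGE}",
    ]


if __name__ == "__main__":
    # Placeholder names; substitute your own rule and network.
    print(" ".join(firewall_rule_command("allow-iap-ssh", "default")))
    print(is_iap_source("35.235.240.1"))
```

Because only this range is admitted, an SSH connection arriving from any other address is still dropped by the default firewall settings.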
